SICK Robot Day 2010.

An international competition for mobile robots. The challenge, organized by SICK AG, will be a kind of orienteering race. The course is a convex arena of about 20 x 30 meters with marked targets along its boundary. These targets, numbered from 0 to 9, have to be identified autonomously and approached in the correct order. Several obstacles are placed inside the arena. Two vehicles start at the same time, in opposite directions. The event will take place on Saturday, October 2, 2010 in the Stadthalle in Waldkirch (Germany). For further details, see the Robot Day general rules.

Vehicle and hardware.

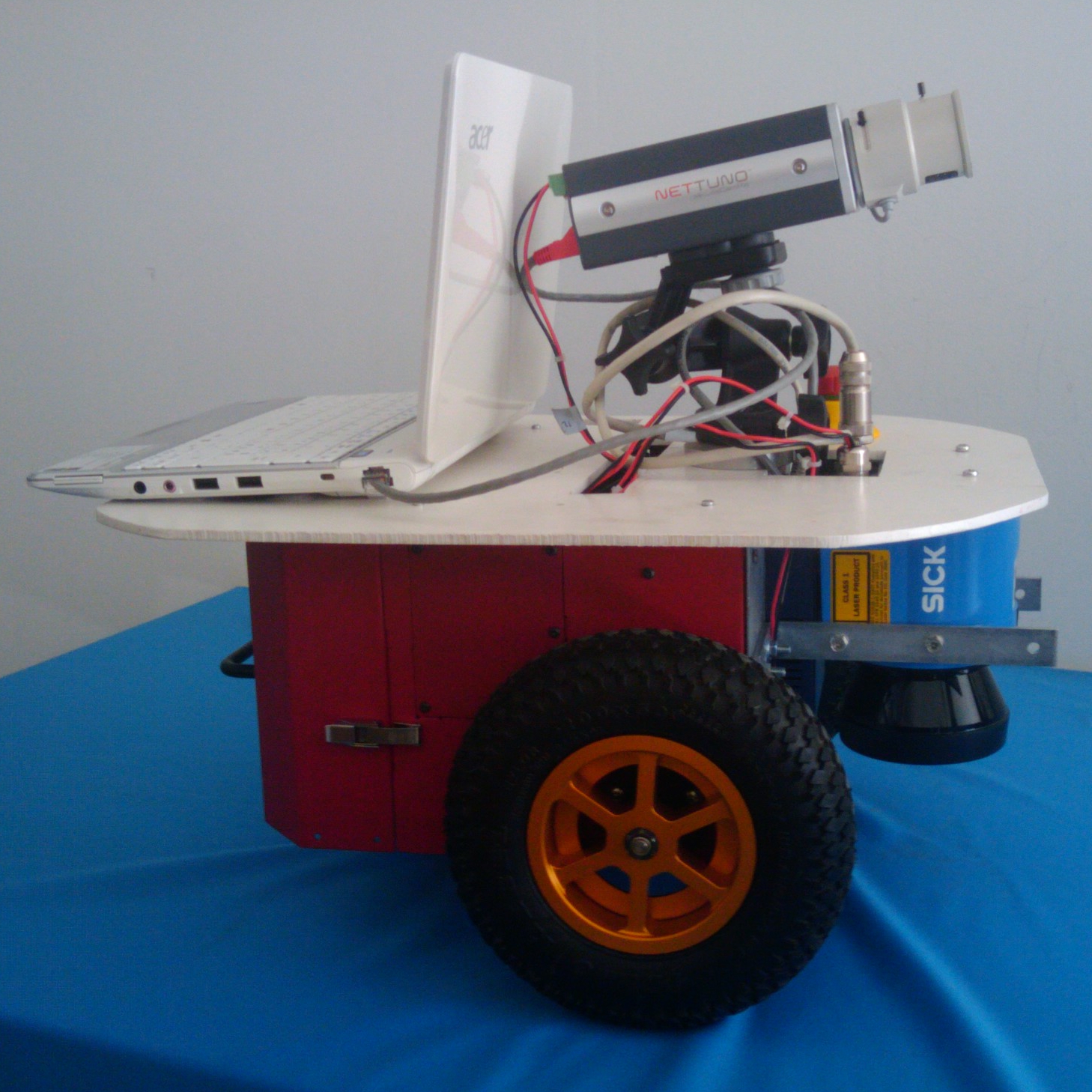

The mobile robot used for the competition is a Pioneer P3DX, a differential-drive robot for academic research. It comes fully assembled with two drive wheels, a maximum translational speed of 1400 mm/sec and a maximum rotational speed of 300 deg/sec.
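
To make the differential-drive idea concrete, the following minimal sketch converts a requested translational and rotational speed into left and right wheel speeds, clamped to the limits quoted above. The wheel separation `WHEEL_BASE_MM` and the function names are assumptions made for illustration; they are not taken from the team's software or from the robot's datasheet.

```python
import math

MAX_TRANS_MM_S = 1400.0  # maximum translational speed quoted in the text
MAX_ROT_DEG_S = 300.0    # maximum rotational speed quoted in the text
WHEEL_BASE_MM = 330.0    # ASSUMPTION: placeholder wheel separation, not from the source


def clamp(value, limit):
    """Limit value to the symmetric interval [-limit, +limit]."""
    return max(-limit, min(limit, value))


def wheel_speeds(v_mm_s, omega_deg_s, wheel_base_mm=WHEEL_BASE_MM):
    """Return (left, right) wheel speeds in mm/s for a differential drive.

    A positive omega turns the robot counter-clockwise, so the right
    wheel runs faster than the left one.
    """
    v = clamp(v_mm_s, MAX_TRANS_MM_S)
    omega = math.radians(clamp(omega_deg_s, MAX_ROT_DEG_S))
    half_base = wheel_base_mm / 2.0
    return v - omega * half_base, v + omega * half_base
```

For example, `wheel_speeds(500, 0)` drives both wheels at the same speed, while `wheel_speeds(0, 90)` spins the wheels in opposite directions so the robot turns on the spot.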

For obstacle and arena boundary detection we used a laser measurement sensor, specifically a SICK LMS100. It covers an arc of 270° with a typical angular resolution of 0.5° per scan, a maximum range of 20 meters and a maximum scan rate of 50 Hz.
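
As an illustration of how such a scan can be used, the sketch below turns one scan (a list of ranges in meters) into Cartesian points in the sensor frame. With a 270° arc and 0.5° steps a scan holds 541 beams. The frame convention (0° straight ahead, beams from -135° to +135°) and the function names are assumptions for this example, not the team's code or the sensor's own angle convention.

```python
import math

FOV_DEG = 270.0      # scanning arc quoted in the text
RES_DEG = 0.5        # angular resolution quoted in the text
MAX_RANGE_M = 20.0   # maximum range quoted in the text


def beam_angles_deg(fov_deg=FOV_DEG, res_deg=RES_DEG):
    """Angles of all beams, centred on 0 degrees (ASSUMED frame convention)."""
    count = int(round(fov_deg / res_deg)) + 1
    start = -fov_deg / 2.0
    return [start + i * res_deg for i in range(count)]


def scan_to_points(ranges_m, max_range_m=MAX_RANGE_M):
    """Convert one scan to (x, y) points, dropping out-of-range readings."""
    angles = beam_angles_deg()
    if len(ranges_m) != len(angles):
        raise ValueError("expected %d ranges, got %d" % (len(angles), len(ranges_m)))
    points = []
    for angle_deg, r in zip(angles, ranges_m):
        if 0.0 < r <= max_range_m:
            a = math.radians(angle_deg)
            points.append((r * math.cos(a), r * math.sin(a)))
    return points
```

Points produced this way are the usual input for line extraction, which is how arena boundaries and obstacle edges can be recovered from a scan.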

Markers are spotted by a March Networks VideoSphere CamPX, a colour digital camera with a frame rate of 25/30 fps, a maximum resolution of 720 x 576 and an Ethernet connection.

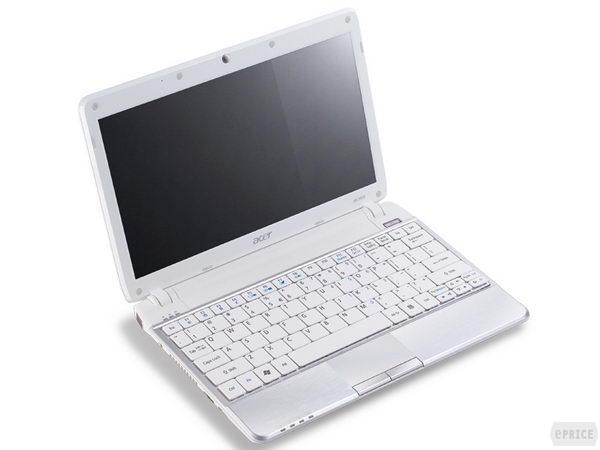

Sensor data analysis (laser and camera) and the dispatch of commands to the motors are performed by an Aspire Timeline 1810TZ notebook. Some technical specifications: Intel® Core™2 Duo processor SU7300 (3 MB L2 cache, 1.20 GHz), dual-channel DDR2 SDRAM memory support, 400 GB hard disk drive, 11.6" HD display with 1366 x 768 pixel resolution.

Architecture and software.

To complete the race, the robot needs several skills: marker detection and classification, map estimation, and sensor fusion, which together produce intelligent behaviour. An ad hoc system was designed and implemented from scratch.

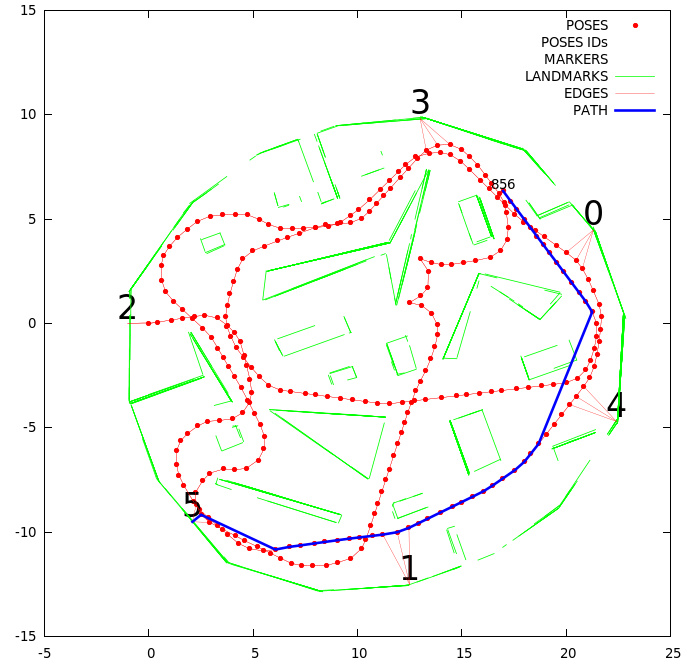

All acquired observations are processed to build a graph-based representation of the explored environment. In particular, robot poses, line features extracted from laser scans and numeric markers are stored together.

The mapping task supports efficient robot navigation.
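
A graph-based map of this kind can be pictured with the minimal sketch below, in which poses, line features and markers are nodes, and an edge records that a feature was observed from a pose. All class and method names here (`MapGraph`, `add_observation`, `find_marker`) are hypothetical and chosen for illustration; the source does not describe the actual data structures.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple, Union


@dataclass
class Pose:
    x: float
    y: float
    theta: float


@dataclass
class LineFeature:
    x1: float
    y1: float
    x2: float
    y2: float


@dataclass
class Marker:
    digit: int
    x: float
    y: float


Node = Union[Pose, LineFeature, Marker]


@dataclass
class MapGraph:
    nodes: Dict[int, Node] = field(default_factory=dict)
    edges: List[Tuple[int, int]] = field(default_factory=list)

    def add_node(self, payload: Node) -> int:
        """Store a pose, line or marker and return its node id."""
        node_id = len(self.nodes)
        self.nodes[node_id] = payload
        return node_id

    def add_observation(self, pose_id: int, feature_id: int) -> None:
        """Link a pose to a feature that was observed from it."""
        if pose_id not in self.nodes or feature_id not in self.nodes:
            raise KeyError("both nodes must exist before linking them")
        self.edges.append((pose_id, feature_id))

    def find_marker(self, digit: int) -> Optional[Marker]:
        """Return the stored marker with the given digit, if any."""
        for node in self.nodes.values():
            if isinstance(node, Marker) and node.digit == digit:
                return node
        return None
```

Keeping markers in the same graph as poses lets navigation look up the position of the next target digit as soon as it has been seen once.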

Marker recognition is performed by applying a combination of filters to the source image captured by the camera, followed by an intelligent classification of the number. The principal aim of this software module is to obtain the region of interest, from which the number's attributes are extracted. These are sent to two different classifiers: a multiple boost classifier and an rtree classifier.
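
The source does not say how the outputs of the two classifiers are combined. One plausible scheme, shown here purely as an assumption, is to accept a digit only when both classifiers agree on it. The classifiers are represented by plain callables; `classify_marker` and its parameters are hypothetical names, not the team's API.

```python
from typing import Callable, Optional, Sequence

# A classifier maps a feature vector to a digit label.
Classifier = Callable[[Sequence[float]], int]


def classify_marker(
    features: Sequence[float],
    boost_clf: Classifier,
    rtree_clf: Classifier,
) -> Optional[int]:
    """Return a digit 0..9 only if both classifiers agree (ASSUMED rule)."""
    first = boost_clf(features)
    second = rtree_clf(features)
    if first == second and 0 <= first <= 9:
        return first
    return None  # disagreement or invalid label: treat the detection as unreliable
```

Requiring agreement trades some missed detections for fewer wrong digits, which matters when the targets must be approached in a fixed order.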

Laser measurements and map data provide a description of the current situation. With this information, the robot decides which behaviour takes control of the motors.
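
A simple way to picture this decision is a fixed-priority arbitration, sketched below. The behaviour names, the `Situation` fields and the safety distance are all assumptions made for illustration; the source does not list the behaviours that were actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    min_obstacle_dist_m: float  # closest laser return, in meters
    target_marker_known: bool   # True if the next marker is already in the map


def select_behaviour(situation: Situation, safety_dist_m: float = 0.5) -> str:
    """Pick the behaviour that gets motor control (hypothetical priorities)."""
    if situation.min_obstacle_dist_m < safety_dist_m:
        return "avoid_obstacle"   # safety first
    if situation.target_marker_known:
        return "approach_marker"  # head for the next target in the sequence
    return "explore"              # otherwise keep looking for markers
```

Only one behaviour drives the motors at a time, so the decision can be re-evaluated on every new laser scan.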

Team.

The team consists of five students led by Dario Lodi Rizzini, under the supervision of Prof. Stefano Caselli:

Gionata Boccalini: Responsible for map design, sensor fusion, behaviour programming.

Andrea De Pasquale: Responsible for software architecture, sensor fusion, behaviour programming.

Mirco Golutti: Responsible for hardware design, behaviour programming.

Filippo La Marchina: Responsible for visual marker recognition.

Lorenzo Rachetti: Responsible for hardware design, behaviour programming.

Final result: 6th place.